marios@ ~]$ /var/log

The Deltacloud API is missing the management of network resources. Many IaaS APIs still don’t have a notion of ‘network’ resource management whilst for others the Network API is relatively new. However, the Deltacloud project has received requests for the addition of network management related functionality and we’ve been discussing this on and off for the past year or so. Towards the end of 2012 we made a first attempt with some network models to fuel discussion and this blog post introduces the second iteration of the code for further comments.

Each member of the ‘networks team’ took ‘ownership’ of a particular cloud provider to discuss how the initial model would ‘fit’ against that provider’s network API. This etherpad has some of our preliminary notes; Jan Provaznik looked at RHEV-M, Michal Fojtik looked at vSphere, Dies Koper looked at FGCP, Francesco Vollero looked at EC2 and I looked at OpenStack and CIMI. Tomas Sedovic acted as UN weapons inspector and sanity checker.

Angus Thomas developed the use cases to guide development. For this first iteration I focused on the simplest use case: “Place an Instance into a particular Network”. Furthermore, I decided to focus on putting down some fully working code for EC2 and OpenStack to explore the feasibility of the Networks abstractions and guide further development for the other drivers.

The Network Models

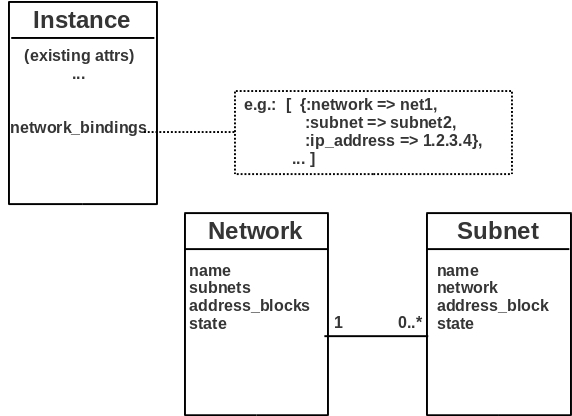

The original model had three entities - Network, Subnet and Port. The ‘Port’ entity proved to be problematic, because for some cloud providers this represented a ‘switch port’ whereas for others it represented a ‘network interface’. In Deltacloud we just want to model the connection of an Instance to a Network. So I decided to remove the Port model altogether and replace it with a ‘network_bindings’ attribute on the Instance resource. This attempts to abstract away or reconcile the differences between the two ‘types’ of Port resource found in the various cloud provider APIs.

As I attempt to illustrate above, Network and Subnet remain as stand-alone resources, implying full CRUD operations for these where supported. The Network and Subnet models are pretty similar with respect to their attributes. One not so obvious distinction worth noting is that Network has an ‘address_blocks’ attribute whereas Subnet has an ‘address_block’ attribute. This is because for OpenStack Quantum, the Subnets that are associated with a given Network do not have to occupy contiguous CIDR address blocks - that is, it is perfectly legal for one subnet to use “10.0.0.0/8” and the other to use “192.168.0.0/16”. These two address blocks cannot be described using a single CIDR string. Hence, for Network, address_blocks is an array of CIDR address strings to accommodate this difference.
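
To make the address_blocks versus address_block distinction concrete, here is a minimal sketch. The two Struct definitions are hypothetical stand-ins for the Deltacloud models (only the attribute names come from this post), and the ids are made up:

```ruby
require 'ipaddr'

# Hypothetical stand-ins for the Deltacloud models, for illustration only.
Subnet  = Struct.new(:id, :network, :address_block)
Network = Struct.new(:id, :subnets, :address_blocks)

subnets = [
  Subnet.new('sn-1', 'net-1', '10.0.0.0/8'),
  Subnet.new('sn-2', 'net-1', '192.168.0.0/16')
]

# A Subnet carries one CIDR string; the Network collects one per subnet.
network = Network.new('net-1', subnets.map(&:id), subnets.map(&:address_block))

# Neither block contains the other, so no single CIDR string describes
# exactly these two ranges.
a, b = network.address_blocks.map { |cidr| IPAddr.new(cidr) }
disjoint = !a.include?(b) && !b.include?(a)
puts "address_blocks=#{network.address_blocks.inspect} disjoint=#{disjoint}"
```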

The Instance model is extended with the network_bindings attribute - an array - because an Instance can be associated with more than one Network (in some providers). Each network_binding contains a reference to the network and subnet as well as the IP address resulting from this association. Moving forward we can either extend this abstraction to contain more attributes or if necessary and feasible, extract it back into a stand-alone entity.
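
As a rough illustration of the shape of this attribute, the sketch below builds an Instance with two bindings. The Struct is a hypothetical, stripped-down model and the ids and addresses are invented; only the :network, :subnet and :ip_address keys follow the driver code in this post:

```ruby
# Hypothetical minimal Instance model; only network_bindings is taken from the post.
Instance = Struct.new(:id, :network_bindings)

inst = Instance.new('inst-1', [
  { :network => 'net-1', :subnet => 'sn-1', :ip_address => '10.0.0.5' },
  { :network => 'net-2', :subnet => 'sn-7', :ip_address => '192.168.1.20' }
])

# Each binding ties the Instance to one Network+Subnet pair and records the IP.
inst.network_bindings.each do |b|
  puts "#{inst.id}: #{b[:ip_address]} on #{b[:network]}/#{b[:subnet]}"
end

networks = inst.network_bindings.map { |b| b[:network] }
```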

Common Operations and Driver Patterns

Networks

For EC2 the Network resource is mapped to the EC2 VPC, whilst for OpenStack it is mapped to the Quantum Network. In EC2, each Network (VPC) has a ‘cidr’ attribute whereas for OpenStack a Quantum network does not (cidr is present on the Quantum Subnet resource). For OpenStack, each Network ‘knows’ which subnets belong to it, whereas for EC2 this information must be deduced by filtering the Subnets.

Populating the list of Network resources in the case of EC2:

    def networks(credentials, opts={})
      ec2 = new_client(credentials)
      networks = []
      safely do
        subnets = subnets(credentials) # get all subnets once
        ec2.describe_vpcs.each do |vpc|
          # collect subnets for this network
          vpc_subnets = subnets.inject([]){|res,cur| res << cur if cur.network == vpc[:vpc_id]; res}
          networks << convert_vpc(vpc, vpc_subnets)
        end
      end
      networks = filter_on(networks, :id, opts)
    end

    def convert_vpc(vpc, subnets=[])
      addr_blocks = subnets.inject([]){|res,cur| res << cur.address_block; res}
      Network.new({ :id => vpc[:vpc_id],
                    :name => vpc[:vpc_id],
                    :state => vpc[:state],
                    :subnets => subnets.inject([]){|res,cur| res << cur.id; res},
                    :address_blocks => (addr_blocks.empty? ? [vpc[:cidr_block]] : addr_blocks) })
    end

In other words, you retrieve the list of subnets, then for each VPC returned create a Deltacloud::Network resource using the VPC and the subset of the Subnets that are associated with that. One distinction/point of discussion here is that for the ‘addr_blocks’ attribute (cidr), when there are subnets in the given VPC, I only report the CIDR addresses of those subnets in address_blocks. The alternative is to simply use the :cidr_block of the entire VPC, but it made more sense to me to report only the addresses that an Instance can actually use at a given time.

For OpenStack, each Network resource ‘knows’ which subnets belong to it, but it does not ‘know’ about the CIDR address blocks in use on those subnets. Hence:

    def networks(credentials, opts={})
      os = new_client(credentials, "network")
      networks = []
      safely do
        subnets = os.subnets # get all subnets once
        os.networks.each do |net|
          addr_blocks = get_address_blocks_for(net.id, subnets)
          networks << convert_network(net, addr_blocks)
        end
      end
      networks
    end

    def get_address_blocks_for(network_id, subnets)
      return [] if subnets.empty?
      addr_blocks = []
      subnets.each do |sn|
        if sn.network_id == network_id
          addr_blocks << sn.cidr
        end
      end
      addr_blocks
    end

    def convert_network(net, addr_blocks)
      Network.new({ :id => net.id,
                    :name => net.name,
                    :subnets => net.subnets,
                    :state => (net.admin_state_up ? "UP" : "DOWN"),
                    :address_blocks => addr_blocks
                  })
    end

In other words, you must retrieve the list of subnets to determine the CIDR address blocks for those subnets belonging to the given Network, before converting the result into a Deltacloud::Network.

One final implication of the difference between EC2 VPC ‘knowing’ about CIDR and Openstack Network not knowing, is that creation of a Network in EC2 demands a cidr block. This difference will have to be somehow reconciled - either by making ‘cidr’ a compulsory parameter that is ignored for Openstack, or making it optional and choosing a default for EC2 when it isn’t supplied. We could also advertise the difference using a driver ‘feature’.
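
The second option (an optional cidr with a default chosen for EC2) could look roughly like the sketch below. Nothing here exists in the patches: the helper name, the driver symbols and the default block are all assumptions made purely for illustration.

```ruby
# Hypothetical default; the real value would need to be agreed on.
DEFAULT_EC2_CIDR = '10.0.0.0/16'

# Hypothetical helper that builds provider-specific creation parameters.
def network_create_params(driver, opts = {})
  case driver
  when :ec2
    # EC2 demands a CIDR block for a VPC, so fall back to a default.
    { :cidr_block => opts[:address_block] || DEFAULT_EC2_CIDR }
  when :openstack
    # A Quantum network carries no CIDR; any supplied block is ignored here.
    { :name => opts[:name] }
  else
    raise ArgumentError, "unknown driver: #{driver}"
  end
end

p network_create_params(:ec2)
p network_create_params(:openstack, { :name => 'net-a', :address_block => '10.1.0.0/16' })
```

The trade-off is that a silently chosen default may surprise a client, which is one argument for advertising the difference as a driver ‘feature’ instead.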

Subnets

Listing of subnets is thankfully more straightforward without any big differences between the two providers. Each returned subnet resource contains all the attributes required to build the Deltacloud::Subnet. In both cases, a subnet ‘knows’ about the Network it belongs to as well as the CIDR block it occupies/uses. For the create operation in both cases you must supply network_id and address_block parameters:

    def create_subnet(credentials, opts={})
      ec2 = new_client(credentials)
      safely do
        subnet = ec2.create_subnet(opts[:network_id], opts[:address_block])
        convert_subnet(subnet)
      end
    end

    def convert_subnet(subnet)
      Subnet.new({ :id => subnet[:subnet_id],
                   :name => subnet[:subnet_id],
                   :network => subnet[:vpc_id],
                   :address_block => subnet[:cidr_block],
                   :state => subnet[:state] })
    end

Instances

The Instance operations impacted for this initial use case are creation, listing (retrieval) as well as deletion. When creating an Instance a client must provide the network_id and subnet_id parameters.

For EC2, placing an Instance into a particular Network during Instance creation is very straightforward, because the EC2 compute and network APIs are exposed via the same interface (the EC2 API). You simply pass the ‘subnet_id’ parameter to the EC2 instance creation operation and extract the required ‘network_bindings’ from the response:

    def convert_instance(instance)
      ...
      if instance[:vpc_id]
        inst_params.merge!(:network_bindings => [{ :network => instance[:vpc_id],
                                                   :subnet => instance[:subnet_id],
                                                   :ip_address => instance[:aws_private_ip_address] }])
      end
      Instance.new(inst_params)
    end

However, in the case of Openstack, the Quantum and Nova APIs do not have such a straightforward interface. To place an Instance into a particular Network, you must first create the Instance, then create a Quantum Port on the required Network and Subnet and then set the Port to refer to the created Instance:

    def create_instance(credentials, image_id, opts)
      (...)
      safely do
        server = os.create_server(params)
        net_bind = []
        # place instance into a network
        if opts[:network_id] && opts[:subnet_id] && (quantum = have_quantum?(credentials))
          port = quantum.create_port(opts[:network_id],
                                     { "fixed_ips" => [{ "subnet_id" => opts[:subnet_id] }],
                                       "device_id" => server.id })
          net_bind = [{ :network => opts[:network_id],
                        :subnet => opts[:subnet_id],
                        :ip_address => port.fixed_ips.first["ip_address"] }]
          server.refresh
        end
        result = convert_from_server(server, os.connection.authuser, get_attachments(server.id, os), net_bind)
      end
      result
    end

For the same reasons (interplay between compute and network APIs), creation of the network_bindings attribute when listing Instances is much simpler for EC2 - you simply extract the required information from the EC2 response. For OpenStack, however, you must deduce the network bindings by retrieving a list of Quantum Ports and discovering which of those refer to the given Instance:

    def instances(credentials, opts={})
      (...)
      insts = attachments = ports = []
      safely do
        if quantum = have_quantum?(credentials)
          ports = quantum.ports
        end
        (...)
          insts = os.list_servers_detail.collect do |s|
            net_bind = get_net_bindings_for(s[:id], ports)
            convert_from_server(s, os.connection.authuser, get_attachments(s[:id], os), net_bind)
          end
        end
      end
      insts = filter_on(insts, :state, opts)
      insts
    end

    def get_net_bindings_for(server_id, ports)
      return [] if ports.empty?
      net_bind = []
      ports.each do |port|
        if port.device_id == server_id
          port.fixed_ips.each do |fix_ip|
            net_bind << { :network => port.network_id,
                          :subnet => fix_ip["subnet_id"],
                          :ip_address => fix_ip["ip_address"] }
          end
        end
      end
      net_bind
    end
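
Since get_net_bindings_for walks the full port list once per server, one possible alternative is to index the ports by device_id a single time and look each server up in the result. This is only a sketch and not part of the patches: the Port Struct stands in for whatever object the Quantum client returns, and index_bindings is a hypothetical helper name.

```ruby
# Hypothetical stand-in for a Quantum port object.
Port = Struct.new(:device_id, :network_id, :fixed_ips)

# Build a { device_id => [binding, ...] } lookup in one pass over the ports.
def index_bindings(ports)
  ports.group_by(&:device_id).each_with_object({}) do |(device_id, device_ports), index|
    index[device_id] = device_ports.flat_map do |port|
      port.fixed_ips.map do |fix_ip|
        { :network => port.network_id,
          :subnet => fix_ip['subnet_id'],
          :ip_address => fix_ip['ip_address'] }
      end
    end
  end
end

ports = [
  Port.new('srv-1', 'net-1', [{ 'subnet_id' => 'sn-1', 'ip_address' => '10.0.0.5' }]),
  Port.new('srv-1', 'net-2', [{ 'subnet_id' => 'sn-4', 'ip_address' => '192.168.0.9' }]),
  Port.new('srv-3', 'net-1', [{ 'subnet_id' => 'sn-1', 'ip_address' => '10.0.0.6' }])
]

bindings = index_bindings(ports)
p bindings.fetch('srv-1', [])  # both bindings for srv-1
p bindings.fetch('srv-2', [])  # a server with no ports gets an empty list
```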

A final note is that for the destroy_instance operation in the OpenStack driver I added an extra ‘cleanup’ step to destroy any ports associated with the given Instance:

    def destroy_instance(credentials, instance_id)
      os = new_client(credentials)
      server = instance = nil
      safely do
        server = os.get_server(instance_id)
        server.delete!
        if quantum = have_quantum?(credentials) # destroy ports if any
          quantum.ports.each do |port|
            if port.device_id == server.id
              quantum.delete_port(port.id)
            end
          end
        end
      (...)
    end

Patches

The patches implementing this functionality have been sent to the dev@deltacloud.apache.org mailing list. They are also available from tracker as set 395. One note is that in order to use the Openstack Quantum code you need to build from my fork of ruby-openstack; I’ll be releasing a newer version of the gem (1.10) that includes this functionality in the next few days.

Note that the current patches have incomplete ‘views’ - in particular the XML/JSON views are not fully implemented. I mainly used the HTML interface for testing and so the HTML UI should be used to review this set for now.

Open Issues/Questions

- How do these revised models and operations fit your IaaS API (RHEV-M, vSphere, FGCP, CIMI)?

For example, for RHEV-M we know that there is no separation of Network and Subnet. Furthermore, creation of Networks is an admin-level task and so the create_network method likely wouldn’t be exposed for the RHEV-M driver. With respect to placing an Instance into a particular Network, I understand the procedure is similar to OpenStack Quantum: create an instance and then create a network interface on that instance which is associated with a particular Network. I will likely be targeting the RHEV-M driver next to explore these issues further.

- How do we reconcile the fact that in some clouds there is no ‘subnet’ entity (e.g. RHEV-M)?

One implication concerns operations such as ‘create_instance’, where we may mandate that a user must supply both a network_id and a subnet_id. We could require only the subnet_id (with the network_id being deduced from that). However, this brings us back to the question ‘what if there is no subnet?’. Do we then map the given IaaS provider’s ‘Network’ resource to the Deltacloud ‘Subnet’ (and only expose ‘Subnet’ but not ‘Network’ at the top-level /api entry point)?

- Do we want to merge the Network and Subnet models?

The logic here is that all the operations regarding an Instance, and indeed the network_binding association of Instance <–> Network+Subnet, involve both network_id and subnet_id. In a given IaaS provider that supports both Network and Subnet models, you associate your Instances with a Subnet, not a Network. So if you have 2 networks each containing 3 subnets, in effect you have a choice of 6 subnets to which an Instance can be associated - each Network+Subnet combination becomes one target for the association. This idea needs further exploration/discussion, though it may solve the issue with those providers that don’t support a two-tier networking model (like RHEV-M, CIMI).
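
The ‘2 networks, 3 subnets each’ arithmetic can be sketched as a flat list of association targets. The hash below is invented sample data and the flattened target format is just one possible representation of a merged model, not something the patches implement:

```ruby
# Invented sample data: network id => ids of the subnets it contains.
networks = {
  'net-1' => %w[sn-1 sn-2 sn-3],
  'net-2' => %w[sn-4 sn-5 sn-6]
}

# Flatten into one list where each Network+Subnet pair is a single target.
targets = networks.flat_map do |net_id, subnet_ids|
  subnet_ids.map { |sn_id| { :network => net_id, :subnet => sn_id } }
end

puts "#{targets.size} possible targets"
targets.each { |t| puts "#{t[:network]}/#{t[:subnet]}" }
```

A provider without a subnet tier would then simply contribute one target per Network.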
